Wednesday, December 28, 2005

Ok vs. Silence

One of their decisions speaks volumes about the philosophy that suffuses much of Microsoft software today. Because memory was so tight, they couldn't afford the number of bytes to print the traditional "READY>" on the screen, so they chose "Ok>" instead. If any of you remember using the BASIC built into the original PC, you'll remember that "Ok>" prompt.

Why say anything at all? If resources were tight, why not just provide a simple prompt when everything is OK? Like ">"? This is exactly what the Unix guys did when they needed to be frugal with resources -- they invented the idea that "no news is good news".

Take this simple distinction, "Ok" vs. "Silence", and multiply it by millions of lines of code and decades of development. What you end up with is the most annoyingly chatty software you can imagine, Windows and Office. They constantly pester me about things I don't care about ("You have unused icons on your desktop", "Your network cable is unplugged", "Let me go harvest clip-art from the Internet for you", etc.). An alternative is Mac OS X, which is sublimely quiet most of the time, and of course command-line Unix which is positively mute (until it needs to tell you something that you really need to know).

Obviously, chatty software annoys me (I've blogged about shortcuts to turn off some of the annoying balloons in Windows before). But I had never thought about why it is that way until I remembered that, when given the choice between silence and verbosity, Bill and Paul chose the latter.

Sunday, December 18, 2005

Bearable Moments

Which makes Christopher Judd's Bearable Moments such a remarkable achievement. I've known Chris for years, and he's written a couple of fine technical books (Pro Eclipse JST: Plug-ins for J2EE Development and Enterprise Java Development on a Budget: Leveraging Java Open Source Technologies). Now, he's written his first children's book, Bearable Moments. One of the most daunting tasks facing any writer (no matter what the genre) is understanding and empathizing with the intended audience. To me, that makes writing a children's book one of the toughest jobs a writer can face. Hats off to Chris -- I hope his venture into non-technical publishing garners him the accolades he deserves.

Friday, December 16, 2005

Is Struts Over?

I bring this up for 2 reasons. First, I think the original Struts succeeded in no small part because it was simple. If you knew how request-response worked, and you knew a few design patterns, you could figure out most of Struts in about a day. Meanwhile, the competition (Tapestry, Turbine, WebWork) was a bit more complex. This is back in the day when people weren't even sure you needed a web framework -- most people were just rolling their own. Along came Struts, which managed to hit the sweet spot of solving some problems without being too big or complex. If you spend some time with them, I think that WebWork and Tapestry are better conceived frameworks (with totally opposite approaches). Note that this was a million years before Ruby on Rails was even a glint in DHH's eyes.

The second reason I bring this up was because I found myself in a Birds of a Feather session on Struts at ApacheCon. And my distinct impression was that of moving deck chairs on the Titanic. The big news of late, of course, is the merging of WebWork into Struts. But, at the same time, the Shale extension of Struts is rolling right along. There was a great deal of discussion about how to manage to reconcile these two fundamentally different paths (action framework vs. component framework). And, unfortunately, all these efforts apparently will appear under the Struts name.

I understand the software geek drive to always reach higher and further. But I think you also need objective perspective about what you've created. Based on my observations, the Struts team has completely lost that perspective. My solution? Keep Struts right where it is, a nice simple web framework with very low barrier to entry. Go ahead and enhance WebWork (and call it WebWork), and go ahead and work on Shale (and call it Shale). Trying to make Struts into an integration platform with lots of complex moving parts kills the one thing that Struts truly has going for it: simplicity in the face of ever growing complexity in the web framework space. For the polar opposite of simplicity, see Faces, JavaServer.

Thursday, December 15, 2005

Ruby for Java Developers at ApacheCon

The appointed time arrived, and I wasn't disappointed. They booked it in the large keynote room, and I had over 100 people for the talk. I had to rush a bit because I had too much material (I'm sure those of you who know me will be shocked by that), but the talk turned out really well. I ran up to and slightly into the afternoon coffee break, so I made the offer for people to stick around and ask questions after the talk. I had about 10 people come up and ask me about various aspects of Ruby, and everyone was interested and engaged.

I've been positioning myself both within ThoughtWorks and externally as a pragmatist on Ruby. I really like Ruby, but I'm not ready to sell the farm on more mainstream languages yet. Ruby has tremendous potential, but there are still hard problems to be solved in Ruby before it can storm the castle walls of Java and .NET. I fully believe that those problems will fall (and I think ThoughtWorks will solve many of them, just as we solved problems in Java and .NET). Of all the candidates for "Next Big Thing", Ruby is the clear, ahead-of-the-pack contender.

Monday, December 12, 2005

Stalking Dr. Hawking

I understand the desire to meet and talk to the people I admire. But, going up to a famous person and being able to enter a real conversation with them is absolutely impossible. Because nuts stalk famous people, they must always be on their guard.

Because I feel this way, I have never approached anyone to shake their hand or get an autograph -- it's utterly meaningless. Besides, there are very few people in the world whose stature I would consider worthy of an autograph. I almost walked over one of them a couple of weeks ago.

During my last trip to San Francisco, my friend Terry and I went to Beethoven's opera Fidelio. Terry was calling home, so I was walking around the beautiful opera house. As I rounded a corner, I came upon a really elaborate wheelchair. I thought to myself "Wow, that's a really elaborate Stephen-Hawking-style wheelchair". Then I walked around the front and nearly bumped into...Stephen Hawking! I remembered reading that he was in town lecturing in San Jose while I was in San Francisco. Of course, even if I wanted to get something silly like an autograph, his advanced state of Lou Gehrig's disease would make it cumbersome. As much as I'm not star struck, it was a little amazing to be so close to such a great man. If ever there was someone worthy of adoration, I would vote for him long before the pop star of the week. I hope he enjoyed the opera!

Sunday, December 04, 2005

A Year at the No Fluff Round Table

This has been a great year for me at No Fluff. I ended up doing 14 shows this year, several more than I had planned, but I'm certainly glad I did. I've blogged about the extraordinary level of attendees before, and they help make the weekend fly by. But the other thing that drives me from my home for a dozen+ weekends a year is the chance to get to hang out with the other speakers.

Back in the 1920's, a group of writers, actors, and other artists started gathering a few times a week at the Algonquin hotel restaurant. This group included Dorothy Parker and Harpo Marx, among others (for a great movie that depicts this group and era, check out Mrs. Parker and the Vicious Circle). According to legend, the Algonquin Round Table discussions were the wittiest in history because they had gathered the quickest wits in New York at that time, which were the best in the world. Here are a few samples:

Robert Sherwood, reviewing cowboy hero Tom Mix: "They say he rides as if he’s part of the horse, but they don’t say which part."

Dorothy Parker: "That woman speaks eighteen languages and can’t say ‘no’ in any of them."

George S. Kaufman: Once when asked by a press agent, "How do I get my leading lady’s name into your newspaper?" Kaufman replied, "Shoot her."

No Fluff has created a geek version of this same phenomenon: the speakers' dinner. On Saturday night, the speakers go somewhere and eat, and every one of those gatherings ends up being the most fascinating conversation you can imagine. All these brilliant guys, gathered to talk about what they've been thinking about all week that they can't discuss with their spouse. I've had more revelations over food during the last year than I probably had my entire life leading up to this year. So, while I'm glad to spend some time at home, I can't wait for the next gathering of the No Fluff Round Table next year -- I'm sure that we will all have several months worth of pent-up discussion just waiting.

Wednesday, November 30, 2005

One of the Cool Kids Now

I almost did this about 4 months ago, until Apple announced the Intel partnership. That froze my decision, thinking that I could hold out until the Intel PowerBooks arrived. But, because of this project (which I will talk about more in a future post), I needed it sooner rather than later.

My finger was literally hovering over the green "Buy" button on the Apple web site, but my spidey sense told me not to click yet. The very next morning, I went to the site and was greeted with the "New Powerbooks" banner headline. Because I waited the extra day, I got the video card upgrade for free, a higher resolution display, and a generally cheaper machine for the same configuration. I saved enough to pay for the extra 1 GB of memory from Crucial.

I've spent the last couple of weeks getting used to the Mac, and it's a joy. Unfortunately, I now travel with 2 laptops: the ThoughtWorks-issued Dell Latitude 610 (which I need for work, particularly .NET development) and the PowerBook for everything else. But, it's a small price to pay to hold my head up high when I'm hanging out with the other No Fluff, Just Stuff speakers (mostly Mac guys because they can). When I write Java, I'm living the dream: using IntelliJ on a PowerBook. At least for the short term, my hedonic adaptation is sated.

Wednesday, November 23, 2005

In Praise of JBoss at Work

My only complaint is one that Scott also laments: lack of testing code. But, I understand that the book would be much larger if they had incorporated testing. In that regard, they did the right thing: the book is about JBoss, not about testing in the J2EE space (a topic worthy of a large number of books all by itself). Kudos to Scott and Tom for a great book.

Wednesday, November 16, 2005

Art in San Francisco

Monday we planned for the rest of the week. Then, on Tuesday, we went to a concert at The Fillmore (mostly just to see the venue). We saw Missy Higgins and Liz Phair, both of whom were entertaining. Wednesday, we went to a matinee of the Tennessee Williams play Cat on a Hot Tin Roof. Wednesday night, opera at the San Francisco Opera (Fidelio, Beethoven's only opera). On Thursday, we went to the SF Museum of Modern Art (one of my favorite museums), then went to dinner with friends and went to a heavy metal concert at Slim's (Fu Manchu).

During the week, I also gave 4 talks and a 4 hour pre-conference tutorial, and I got some work done as well. Mostly what we didn't do was attend any of the other sessions! All in all, a great conference week.

Tuesday, November 01, 2005

No Fluff, Just Stuff Does .NET

But a funny thing happened in Austin: he was right! Suddenly, my ace game was barely adequate. I distinctly remember telling co-workers when I returned that I felt like a kid eating at the adult's table. Jay had (and has) managed to create an extraordinary gathering in every city he visits. I have perspective on this because I've been to lots of conferences. Maybe it's the weekend seclusion, or the longer than average sessions, or the high level of discourse in the session which spills into the hallways, the meals, and to everyone you meet. It's all those things, with one more keystone ingredient: the speakers. I am honored and humbled to be considered a part of this extraordinary group of individuals: the most brilliant minds in the industry, genuinely personable, gregarious, funny, and centered. Jay has created a work of genius, gathering this group to talk about technology 27 weekends a year.

Until now, unless you were a technology switch hitter, you had to be in the Java (or, increasingly, Ruby) crowd to even know that No Fluff, Just Stuff existed. Now, Jay is expanding this phenomenon to the .NET world. December 2nd begins a new era for .NET developers: you get your very own No Fluff, Just Stuff. Ted Neward is running this show, and it is his considerable burden to re-create the pure magic of No Fluff, Just Stuff in the .NET space. If anyone can pull it off, he can. A new group of speakers, a new set of cities, and a new chance to create a community revolving around the most unlikely of campfires: a computer platform. There are a few of us participating in both worlds (myself, Ted, Glenn Vanderburg, Stuart Halloway, and a few others). It will be our job to set the tone for the new speakers to create the same but different ambiance for a new crowd.

Every so often, an event happens that you simply cannot miss, and, for .NET developers, this is the Beatles in Shea Stadium, Elvis on Ed Sullivan, and Johnny Carson's last Tonight Show. The premiere. One weekend only. Denver, December 2-4, 2005. The first of many, many No Fluff, Just Stuff.NET shows. You have to see it to believe it.

Monday, October 31, 2005

Technology Snake Oil 7: Demoware

Of course, every real developer knows that you can't do real development like that. In fact, most developers now (I hope, at least) know to create layered, modular applications so that they are maintainable, updateable, and a bunch of other "-ables". But this sea change hasn't permeated most managers yet. They still purchase development tools (on behalf of developers) based on 15 minute technology demonstrations. I firmly believe this is one of the reasons Sun created Java Studio Creator for JavaServer Faces. They were getting killed in demoware sessions against Microsoft's ASP.NET story. Now, Sun can compete with Microsoft on creating useless toy applications in front of a crowd. And they say there's no such thing as progress!

This hurts development on several levels. Real developers are frustrated because their managers develop unrealistic expectations for the rate of development. Managers are frustrated because they can't figure out what their developers are doing for the weeks and weeks it takes them to build what looks on the surface like the toy application they saw someone build in front of them. And both groups are irritated at each other.

I'm not against productivity tools (I'm not a "use-linux-and-Emacs-for-everything-everyone-else-is-a-wimp" kind of Stallman-esque developer). I use IDEs and appropriate tools, including some of the aforementioned RAD tools, although not in the manner suggested above. In fact, I have a tool fetish that I must constantly fight (you know, Emacs is pretty darn powerful in a lot of circumstances). I am against vendors who try to seduce managers and other non-techies with shiny objects that don't really deliver the promise of the snake oil they sell.

Friday, October 28, 2005

Technology Snake Oil Part 6: Drowning in Tools

The problem we have in the development world is that we are drowning in tools. The “bit rot” problem in Windows is well known. The more software you install, the more likely there will be conflicts between components, the slower it gets, and the flakiness level rises. I reached an interesting milestone recently. I had the opportunity to build my development laptop completely from scratch (no ghost images, everything installed in pristine state from CD's). Anticipating this momentous occasion, I compiled a list of all the tools that I thought I couldn't live without. Many of these tools are very special purpose (like WinMerge), many of them are open source, but they are all tools that I use, maybe not daily, but at least sometimes. Once I got everything installed, I started getting the kind of flaky behavior symptomatic of a bit-rotted Windows machine. I had reached a milestone: my basic working set is incompatible with Windows.

This led me to pare way down on the tools I use. Every tool has to defend its life, and make the cut to survive. I gave up some stand-alone editors (I have a text editor fetish, and formerly had a bunch of them, each for one or two things they did really well). Most of those chores have moved over to Emacs now. OK, and Notepad++. And Crimson Editor. But that's it! I promise! I’ve been actively trying to cut down my working set.

Developers love software, and tend to accumulate a lot of it. In the pathological case, developers can spend so much time installing, uninstalling, and trying to get integrations working between tools that they have no time left for productive work. I have to fight this tendency in myself to try new tools, because each one represents a shiny new object that will, according to its web site, be faster, lighter, more aerodynamic, and brighter, a floor wax and a dessert topping. Using an over-worn analogy, we have an entire woodworking shop filled with expensive, gasoline powered, multi-purpose saw/drill/lathe/turnip twaddlers.

Instead of monolithic tools, we need very cohesive, modular tools. The Unix guys got it exactly right – create simple command line tools that follow a few conventions (like all input and output is unadorned text). They created independent, cohesive modules of behavior. Once you understand how these modules work, you can chain them together to achieve what you want. They even built in integration tools, like xargs (which lets you massage the unadorned text output from one program into the expected format of another). Without question, someone who understands how these tools work is more productive (with fewer headaches) than someone struggling to use a behemoth like Software Delivery Optimization. Why are the command line tools better? You can script them in nice clean ways, and let the computer do work for you. One of the unfortunate trends with graphical environments is the dumbing down of power users. If you watch how power users in the Unix world work vs. typical power users in the Windows world, the Windows guys (on average) are working a lot harder, performing a lot more repetitive tasks. We need a woodworking shop filled with simple hand tools, not more electricity and kerosene. Throw yourself a life preserver; download cygwin today.
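For anyone who hasn't lived at a command line, here is a rough sketch of the "small cohesive modules chained over plain text" idea, written in Python rather than shell. Everything in it is made up for illustration: the stage names (grep, cut, sort_uniq_count) only loosely imitate their Unix namesakes, the pipeline helper stands in for the shell's | operator, and the log lines are invented. It does not try to model xargs.

```python
import re
from collections import Counter
from functools import reduce


def grep(pattern):
    """Stage: keep only the lines that match a regular expression."""
    regex = re.compile(pattern)
    return lambda lines: (line for line in lines if regex.search(line))


def cut(field, sep=" "):
    """Stage: keep a single separator-delimited field from each line."""
    return lambda lines: (line.split(sep)[field] for line in lines)


def sort_uniq_count():
    """Stage: count duplicate lines and emit them most frequent first."""
    def stage(lines):
        for text, count in Counter(lines).most_common():
            yield f"{count} {text}"
    return stage


def pipeline(*stages):
    """Chain stages left to right; each one only sees lines of text."""
    return lambda lines: reduce(lambda stream, stage: stage(stream), stages, lines)


# Hypothetical log lines, invented for this example.
LOG = [
    "ERROR db timeout",
    "INFO started",
    "ERROR db refused",
    "ERROR cache miss",
]

# Roughly: grep '^ERROR' | cut -d' ' -f2 | sort | uniq -c | sort -rn
count_errors_by_component = pipeline(grep(r"^ERROR"), cut(1), sort_uniq_count())
result = list(count_errors_by_component(LOG))
print("\n".join(result))
```

None of the stages knows anything about the others; the only contract between them is "lines of text in, lines of text out", which is why they can be rearranged or reused without any integration work.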

Monday, October 24, 2005

LOP Weekend

I was afraid that everyone would be sick of this topic by the time Sunday afternoon rolled around. However, I had a good group for both parts of the talk. Several people asked me during the day if it made sense to come to the second one if they didn't come to the first, and I assured them that it was OK. The first part of the talk was up against one of Keith Donald's Spring talks, which is tough competition this year at No Fluff. The first part of the talk replicates the material in the keynote, but at a much higher level of detail. The second part of the talk is designed to be more practical. I show some interaction with JetBrains Meta-Programming System, but mostly the 2nd part encourages discussion about the viability and future of LOP. We had a great discussion, including problem domains that match LOP early adoption and what developers are already doing in this space. One of the more active attendees works for a really, really big company here in Atlanta, and he stated that LOP is currently number 4 on their list of hot technology trends to watch (no, I didn't ask what the other 3 were). He was an evangelist within his company for this evolution, so that's why he was so involved in the discussions around it all weekend.

One of the best things about discussing a topic like LOP at No Fluff conferences is the number of people that you meet that are already doing this. The general level of the audience at No Fluff continues to amaze. For something new like LOP, No Fluff provides a valuable traveling road show to meet and greet the folks who represent islands of cutting edge technology (who generally are just looking for a kindred spirit for discussions). Last year, one of the attendees commented that the best thing about No Fluff is that he could talk about work and everyone around him knew what he was talking about!

Thursday, October 20, 2005

Chattanooga Choo Choo

Chattanooga Java Users Group meeting. This is interesting because the trip from Atlanta to Chattanooga takes me back through my home town of Dalton, GA. I was contacted a few months ago by an alumnus from Dalton Junior College (where I got my associate's degree in physics) who recognized me from an article or something and asked me to speak at CJUG. So, instead of getting on a plane to speak at a JUG, I'm driving for 2 hours.

This will be an interesting blast from the past for me. Chattanooga is only about 20 miles north of Dalton, so I spent a lot of time there growing up. One of my summer jobs was in Chattanooga. And, it gives me a chance to stop by and see family on the way back. Today will be a misty, rose colored memories kind of day for me. Oh, and then I have to talk about JavaServer Faces! A harsh plummet back to reality!

Tuesday, October 18, 2005

Slashdotted!

Ruby for Java Developers

My article on the Ruby Development Tools (RDT) has just been published by the IBM developerWorks site. The RDT is not a full-blown IDE for Ruby yet, but it does have excellent support for debugging Ruby code, which is a huge win for me. I've debugged some pretty hairy Ruby code in it (including code that does automation in Windows) and have been very happy.

See, I'm trying to find a way to bind the Java and Ruby communities together. Can't we all just get along?

Monday, October 10, 2005

Missing the Point

Is that the right message to send out to the world? Is raw developer productivity for mindlessly simple applications buying us anything? The only people impressed by this type of demo-ware are non-technical managers, who have budgets. "Wow, if a 9 year old can build an application in 9 minutes, just think: our developers will be building our entire corporate infrastructure! We must buy this tool!" I've posted my thoughts on RAD before. It is disappointing to see a company with so many sharp people pushing 2 aspects of software development that I think are counterproductive.

Not everyone in Borland feels this way. I'm corresponding with several Borlanders about how they can support agility in their Application Lifecycle Management (ALM) tools. The tide is turning. I am not naive enough to think that they should just ditch their product base because of what is still just opinion. I just hate to see what I consider poisonous messages to developers and their managers.

Tuesday, October 04, 2005

Dexter

This has changed somewhat with Dexter. I was pretty impressed with what I saw. As a development tool, it is approximately on par with Visual Studio now in all but stability (beta testers tell me it is still pretty scary). Microsoft has the luxury of beta testing VS for 2 years; Borland cannot afford even a fraction of that. Still, the environment looks pretty good. It finally has a reasonable number of refactorings available (Delphi 2005 had 4, and from the reaction of the Delphi faithful last year, you would have thought that Borland had invented cold fusion). In my estimation, it is about 70% of a real IDE now. It is still hosted on Galileo, but it has greatly improved over the last year or so. The same IDE supports both Win32 and .NET development and has all the Borland Secret Sauce components. The next release, due out mid-next year, will even support .NET 2.0. Still not compelling enough to get anyone to consider it as an alternative to Visual Studio for new C# development (unless you are deeply immersed in Borland's Application Lifecycle Management integration strategy) but at least it isn't an embarrassment now.

Monday, October 03, 2005

How Important is Syntax?

development tool. I was speaking at the Entwickler Conference ("Entwickler" is German for "developer") in Frankfurt, talking about Java and .NET. This conference is ostensibly a general developer's conference, but Borland is one of the main sponsors and it has traditionally been the European gathering place for Delphi developers. The faithful only get one Delphi focused conference per major land mass, so they all come. The Java and .NET guys can get information anywhere. Probably 70% of the talks at this conference either focus on Delphi or overlap significantly (for example, I did an "Updated Design Patterns in .NET" in C#, but the material also applies to Delphi.NET).

The interesting part of my trip was the discussions between sessions, at dinner, and in the halls. Even though Delphi is a niche development tool with a shrinking market, the people at this conference are unusually passionate about their tool. I sought to understand this blazing exuberance. I traverse several different languages and tools pretty regularly (Java, .NET, and Ruby), and to me they are just tools -- each has its own strengths and weaknesses. I also used Delphi pretty much exclusively for about 4 years (and wrote one of the first Delphi books), so I understand the tool and language. But I've never gotten so vein-bulging-in-the-forehead excited when someone told me they thought something else was better.

This crowd has its back against the wall: Borland's clear future direction with Delphi is to make it Yet Another .NET Language (YA.NL?). The last couple of versions of Delphi have supported both Win32 and .NET capabilities (and most of the developers in Germany are still doing Win32 applications). In my opinion, this marginalizes Delphi to also-ran status with C# and Visual Studio. Sure, if you are building in Delphi now and have a bunch of code, you'll stick to Delphi (maybe). But if you are starting a new project, there is no way you would pick Delphi over C#. The IDE isn't any better (and it is less stable) than Visual Studio, and it will not have a version that supports .NET 2 until mid-next year.

Tiptoeing around the fanatics, I asked a few people if the above assessment is true. "No! The next version of Delphi will win converts in droves!" At the end of the day, it boils down to which syntax you like (if the tools are at about parity and the framework is the same, you are down to begin...end pairs vs. curly braces). I've always had an easy time switching syntaxes, both between similar (Java and C#) languages and very different ones (Java and Ruby). I asked some of these folks: "Is the syntax of the language really that important to you?" I got a resounding "Yes!" from several people. They are willing to use a third-world development tool just so they get to type Pascal all day instead of C#. Just like all marginalized peoples, they overcompensate anytime someone like me desecrates the object of their devotion.

You see some of the same fervency in the Ruby and Lisp crowds, but they are genuinely different from their peers. The Delphi vs. C# crowd are supporting the same platform, with different syntaxes. Would you be willing to jeopardize your job over the syntax of a programming language? I still do not get it.

Monday, September 19, 2005

Swimming Up the Waterfall

A coworker and I found ourselves at a client site that was a big believer in my favorite of the dysfunctional methodologies, Waterfall. We were hired to help them design an advanced new system (that, of course, interacted with their still very active Mainframe development) with innovative technology. My coworker and I showed up with great enthusiasm (because we didn't know what was going on yet).

Here is what we were tasked to create: First, we need a Functional Design document (a technical-ish set of requirements) to pass off to the business unit to get approval for the project (which was already approved -- that's why we were there). It took man-weeks of effort to produce this utterly useless document, which was created by emailing Word documents around, because they don't put design documents in version control (What? Did I hear that right? Why not? "We just don't." "No, why?" "If it's in version control, others can see it."). OK.

Next comes the Technical Design Document, another multi-man-week project, where we take the Functional Design and produce a Technical Design, meaning that we finally get to reveal all the technical decisions we made while writing the Functional Design but couldn't put in that document. And we can't produce "prototypes", because that's a bad word -- business people want to see prototypes run. "Can we call them spikes?" "Sure, no one knows what that means." A few times, I thought my coworker's head was actually going to explode. We set up our own secret version control system and started writing our design documents (oh, sorry, Design Documents) using XML so that we could diff them, and converted them to Word documents when the big flurry of Consolidating the Documents happened.

Then we made a decision. Given the amount of time that we had to create the Technical Design Document, we could just write the code, using XP techniques (test-first coding, iterative design, etc.) and produce the design document based on the working, tested code. And that's what we did with part of the system. We kept this under wraps (after all, we're supposed to be designing, not coding) and made good progress. To show the quality of the code, I implemented code coverage using Cobertura so that we could demonstrate that the unit tests were actually exercising the code. With just a couple of days left in the Technical Design phase, we were asked to provide estimates as to how long it would take to implement our design. That's when we let the cat out of the bag: "Do you want us to tell you how long it would take, or how long we have left? We can give you really good estimates because it's already done". We showed them the implementation and the unit tests, along with code coverage (96% code coverage, 100% branch coverage). The Technical Design Document was already based on working code.

They weren't upset, but weren't particularly happy either -- just kind of stunned. This way of writing software is so foreign that they have no perspective on it. They've never seen code with unit tests, or code coverage, nor any code that was produced in an iterative way. You are supposed to spend months on design documents, then write the code, right? How is it that you can not do all that? Instead of the elation that we expected, we just got a weak "Good job, guys", and they took their working code and incorporated it where it was supposed to go. We were finished with our tasks, so we left.

But not without planting some seeds. You see, we involved some of their other programmers in what we were doing. We didn't code with them, but explained how we were going about it. And we showed them unit tests (which they had heard of) and code coverage (which was new). The other developers clearly saw the benefit of what we had done. Sometimes, you can't dig a deep hole all at once because you have to move too much dirt. But a river can dig a deep hole a little at a time, eroding away grime and stone bit by bit. Even if we didn't dig a deep hole, maybe we created a rivulet.

Thursday, September 15, 2005

Technology Snake Oil, Part 5: The Integration Myth

Recently, another example popped up from an unexpected place. It turns out that there is a bug in my beloved IntelliJ and WebLogic, but only when you are doing distributed transactions and messaging. My colleague spent the better part of a day trying to resolve that little nasty bug.

The snake oil hiding in the shadows here is the promise of integration sold by tool vendors. IDEs try to encompass more and more. Visual Studio has always been successful with this (especially if you use all Microsoft technologies) without achieving a truly great code experience. Visual Studio.NET 2003 is a third world country compared to the scarily intuitive IntelliJ, which is the Rolls-Royce of IDEs. VS.NET 2005 is better, but still not up to IntelliJ’s standard. Borland tried to take this to the extreme conclusion with its Software Delivery Optimization suite, which tried to bind together requirements gathering, version control, coding, deployment, and monitoring into a single environment. This vision yields an awesome productivity gain as long as the integration is flawless. However, integration at that level is never flawless, meaning that you spend as much time (and considerably more frustration) trying to figure out why something that should be working isn’t, only to find out that it actually is working but the integration is obscuring the results.

Some integration is good – that’s why we have integrated development environments. However, there is a fine line past which vendors go too far and end up lessening productivity rather than enhancing it. The problem is that tool vendors are trying to create monolithic environments. I suspect that Visual Studio in all its incarnations may be the culprit here – everyone is trying to replicate that environment. Finding the fine line between integration and stand-alone tools is tough and going to get tougher, as vendors produce more and more immersive environments to get you to buy into their integration strategy.

Someone has done this exactly right. In the next Technology Snake Oil (Drowning in Tools), I’ll talk about that.

Monday, August 29, 2005

Technology Snake Oil, Part 4b: Prescriptive Approaches in the Large

Following this line of reasoning, I think that the PetStore was one of the worst evils inflicted on the young, innocent, fawnlike Java Enterprise Edition. It was supposed to be a catalog – “Look, here are all the things this shiny new technology can do” – and people took it as a prescription. Rather than sit back and determine what EJB was suitable for, developers dove right into using it in their projects because the prescription said so. EJB was (and is) suitable for only very specialized types of applications. If you were writing a real pet store application (even back when PetStore came out), you would be crazy to use EJB!

The pattern repeats over and over, from technology (“Everyone is using Struts, so we should be too”) to methodologies to products. You can bet that the SOA vendors are salivating over the prospect of creating the prescription that says “Everything must be decoupled, document-centric messages – here, we have an expensive product that just happens to do this!” As much as it hurts, you have to determine for yourself which technology (methodology, framework, tools, etc.) is best for the situation you are in right now. There is no prescription, no matter what ersatz technology doctors tell you.

Friday, August 26, 2005

Joel is Plain Wrong about XP

As I worked through the screens that would be needed to allow either party to initiate the process, I realized that Aardvark would be just as useful, and radically simpler, if the helper was required to start the whole process. Making this change in the spec took an hour or two. If we had made this change in code, it would have added weeks to the schedule. I can’t tell you how strongly I believe in Big Design Up Front, which the proponents of Extreme Programming consider anathema. I have consistently saved time and made better products by using BDUF and I’m proud to use it, no matter what the XP fanatics claim. They’re just wrong on this point and I can’t be any clearer than that.

Joel has an interesting perspective on this subject and has written quite eloquently on this and other topics (I highly recommend his books and blog). However, I think he’s just wrong about this. He has a skewed view on software development, having worked at product companies his whole career. Building products is a lot different from building business applications (which is where my experience lies). First, for products, the requirements and design are pretty much made up of a few people’s vision of what the product needs to do. The marketplace determines if they were right or not. Second, you generally have to do this in secret, especially if you have competitors who will cherry-pick your design. Third, you are pretty much required to do a “big bang” deployment when you ship to customers.

Most business applications aren’t like this. The requirements are very fluid (quite often, the business stakeholders don’t know what they want even well into the process). When done correctly, the process is collaborative across business units and developers, making for a stronger product. Last, you can roll out iterations (even if just to QA and/or acceptance testing) without the big bang.

Joel is using straw man arguments against XP. Many misinformed naysayers assume that we never think about what we’re doing up front. Of course we do. This same group assumes that there is no coherent architecture for complex XP projects. Just because we’re doing agile development doesn’t mean that we just sit down and hack out code with no planning or forethought. Believe me – I’ve had lots of experience building business software, and Big Design Up Front just plain doesn’t work, even when it is done well (I’m fighting it yet again even as we speak). XP delivers better quality software, in shorter times, closer to the ultimate target, which has a fighting chance of actually being useful.

Taking this topic to the logical conclusion, I wonder what would happen if a product company did a full-on agile approach, building the product based on customer feedback from the early iterations? You would need a patient customer base, willing to comment on the work in progress. I can’t help but believe that, just as in the business software world, you would end up with a better product. How many features could you eliminate in something like Word if it had been developed incrementally? So much useless cruft could be removed from commercial software if product teams listened to their customers while developing the software rather than to the complaints after it is done.

Some companies are tiptoeing in this direction. Check out JetBrains Early Access Program for innovative tools like Fabrique and MPS (The Meta Programming System). I think these products will be extraordinary if they can get their target audience to give them feedback. Agile product development – what a concept!

Monday, August 22, 2005

My Blog, in Korean

I'm an Apache Committer who is still on the way of studying English. I'm trying to level-up my English skill translating great blog entries from great authors.

I think that's more than a bit generous, but I'm gladly supporting this effort because, hey, it isn't costing me anything and it spreads my meaningless opinions all over the world. What an unbeatable combination. Seriously, I appreciate the hard work Trusten is doing for this, and I hope that my dribbly writing is worth translating.

Now, I’m really interested to see if he translates this blog entry, creating a kind of recursive blog referencing stack overflow.

Thursday, August 18, 2005

Technology Snake Oil

The world is a much simpler place if you can just coast through it, without having to expend precious mental energy. In fact, coasting seems widely encouraged in many organizations: if we can prevent our employees from thinking too much, they will stay out of trouble. Unfortunately, building insanely great software requires thought on many different levels, which doesn’t go well with prescriptive approaches.

You see all sorts of prescriptive approaches in software development, both large and small (this entry covers small, the next Snake Oil piece covers large). Small prescriptive manna floats down in many different guises, mostly in a good context like design patterns and best practices. Many developers take these nuggets out of context and start believing that this Prescription for building all types of things represents the One True Way (without bothering to apply additional thought). Don’t think that I’m denigrating design patterns – they are clearly a good thing, as a way to catalog common patterns. The problem comes in when they are used without thinking.

Here is a classic example that appears all over the Java code I see every day. Java doesn’t really have a struct-type thing, which is just a holder for data. In Java, everything is a class, which is OK. Nevertheless, I see developers who just need to pass composite data around creating a Java class with accessors and mutators that won’t ever be used for encapsulation. It has become a common prescriptive practice in Java to create the class, create private member variables, and generate getters and setters for them without remembering why you create accessors and mutators. If this class is never going to have any behavior attached to it, if it is a simple container for information, you don’t need the accessor/mutator pair or the private scoping – make the fields public. If you really need to add behavior and/or encapsulation later, you can refactor the class into a real class, not just a composite data type.

The real sermon here is to think about why you create things; don’t just blindly create artifacts. Yes, encapsulation is generally a good thing, but it isn’t required in every single thing that looks like a class – sometimes it’s really just a struct. You must always be diligent about why you are creating what you create.
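To make the struct point concrete, here is a minimal sketch. The `Address` and `LabelPrinter` names are made up for illustration and don't come from any real project; the only claim is that a behavior-free holder can expose public fields and still be perfectly usable.

```java
// Hypothetical data holder: no behavior, so no getters/setters, just public fields.
final class Address {
    public String street;
    public String city;
    public String postalCode;
}

// Hypothetical consumer that only passes the composite data around.
final class LabelPrinter {
    static String format(Address a) {
        return a.street + ", " + a.city + " " + a.postalCode;
    }
}

class StructDemo {
    public static void main(String[] args) {
        Address a = new Address();
        a.street = "1 Main St";
        a.city = "Atlanta";
        a.postalCode = "30301";
        System.out.println(LabelPrinter.format(a));
    }
}
```

If `Address` later grows real behavior (validation, say), that is the moment to make the fields private and add accessors, and the compiler will point at every caller that needs to change.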

Wednesday, August 17, 2005

Fighting for Your Life

I was almost voted off the island recently.

Because of some scheduling issues involving me rolling off a project, another one starting, and the slated person still a week away from rolling off his project, I was in the unfortunate position of going to a client's site for just 1 week, at the start of a new project. The first day, I arrived late because of travel details, and we spent the day going over the technical details of what it was we were going to be doing. All fine and good. In fact, one of the overviewers was kind enough to identify something he thought I could do in just a week, something orthogonal to the rest of the work.

The next day I got to work and the client supervisor asked me into his office. "Neal, we just don't think the one week thing is going to do us any good. We like you and all, but you should just go ahead and leave." He was trying to kick me off the island! The last thing he said was "Unless you can think of a good reason we should keep you..." That was my chance. I said "Well, of course, it is your decision, but I have found something I can get done in a week", and went on to tell him in technical detail about the one-off sub-project we had identified the day before. He listened to my case, then called the technical lead into his office and had me repeat it. The tech lead said "Well, we do need that piece, and I think he can get it done in a week". The supervisor turned to me and said "Alright, get back in your office and get to work!" Saved!

And it turns out that I was able to get the work done in a week, and the client requested that I stay on instead of the guy originally slated for the gig. How is that for defending your life?

Friday, August 12, 2005

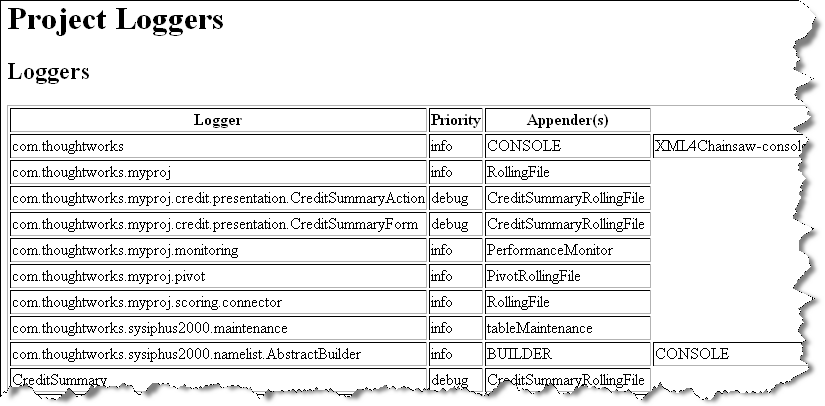

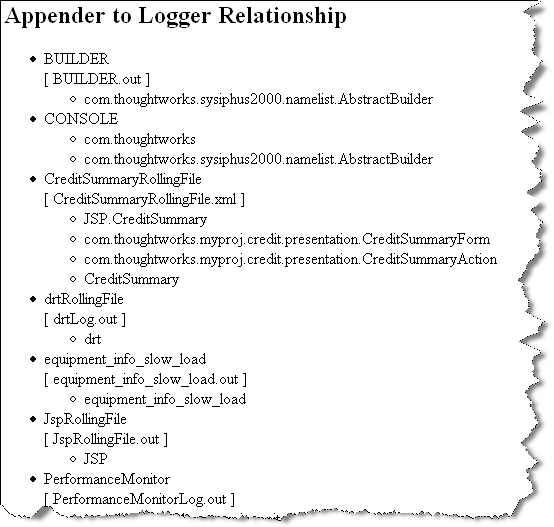

An Actual, Real, Everyday Use for XSLT

I have played with XSL and XSLT for a while, and even toyed with the idea of basing my whole website on an XML+stylesheet vision. Because XSLT can get complicated, that idea died before its time. However, I recently found a really good use of XSL on a development project on which I was working. This project included the ubiquitous Log4J library, including a largish logging.xml document. My job was to make sure this document is still relevant. The problem with trying to analyze such a document lies in the way that items reference each other. Instead of pulling my hair out bouncing around in the XML file, I created an XSLT stylesheet to format the whole thing into HTML, with links from loggers to appenders and appenders to loggers. The end result looks like this (broken into 2 pieces for space savings):

The stylesheet that generates this info from the XML document is not long or complex:

    <?xml version="1.0"?>
    <!-- XSL stylesheet to make it easier to determine the relationships between -->
    <!-- the loggers and appenders used in the application. -->
    <!-- This stylesheet is automatically linked into the logger.xml document. -->
    <!-- If you are using any reasonable browser, this stylesheet should be -->
    <!-- automatically applied to this file. -->
    <xsl:stylesheet version="1.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
        xmlns:log4j='http://jakarta.apache.org/log4j/'>

      <!-- Page skeleton; the real work happens in the log4j:configuration template. -->
      <xsl:template match="/">
        <html>
          <head>
            <title>
              Loggers
            </title>
          </head>
          <body>
            <xsl:apply-templates select="log4j:configuration" />
          </body>
        </html>
      </xsl:template>

      <xsl:template match="log4j:configuration">
        <h1>Project Loggers</h1>
        <h2>Loggers</h2>
        <!-- One row per logger (category), sorted by name. -->
        <table border="1">
          <tr>
            <th>Logger</th>
            <th>Priority</th>
            <th>Appender(s)</th>
          </tr>
          <xsl:for-each select="category">
            <xsl:sort select="@name" />
            <tr>
              <td><xsl:value-of select="@name" /></td>
              <td><xsl:for-each select="priority" >
                <xsl:value-of select="@value" />
              </xsl:for-each></td>
              <xsl:for-each select="appender-ref">
                <td><xsl:value-of select="@ref" /></td>
              </xsl:for-each>
            </tr>
          </xsl:for-each>
        </table>
        <h2>Appender to Logger Relationship</h2>
        <!-- One list item per appender; the loggers that reference it are nested inside. -->
        <ul>
          <xsl:for-each select="appender">
            <xsl:sort select="@name" />
            <li>
              <xsl:value-of select="@name" />
              <xsl:for-each select="param">
                <xsl:if test="@name = 'File'">
                  [ <xsl:value-of select="substring-after(@value, '${logging.home}/')" /> ]
                </xsl:if>
              </xsl:for-each>
              <ul>
                <xsl:call-template name="show-categories">
                  <xsl:with-param name="appenderName"><xsl:value-of select="@name" /></xsl:with-param>
                </xsl:call-template>
              </ul>
            </li>
          </xsl:for-each>
        </ul>
      </xsl:template>

      <!-- Lists every logger (category) that references the named appender. -->
      <xsl:template name="show-categories">
        <xsl:param name="appenderName" />
        <xsl:for-each select="//category">
          <xsl:for-each select="appender-ref">
            <xsl:if test="@ref=$appenderName">
              <li>
                <xsl:value-of select="../@name" />
              </li>
            </xsl:if>
          </xsl:for-each>
        </xsl:for-each>
      </xsl:template>

    </xsl:stylesheet>

I also added a reference to this stylesheet in the logger.xml document itself:

<?xml-stylesheet href="ShowLoggerRelationships.xsl" type="text/xsl" ?>

The beauty of this is that any reasonably modern browser will automagically apply this stylesheet anytime you double-click on the XML file. This is the ultimate form of documentation -- generated directly from the source in real time. Any changes to the relationships in the logger.xml document are instantly shown when you look at the file. If you still want to see the raw XML, you right-click and "Open With..." instead. This makes the browser view of the XML document useful without hiding or obscuring the functional elements. It is also a good example of the DRY principle (Don't Repeat Yourself) from The Pragmatic Programmer, applied to documentation.
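The browser isn't the only thing that can apply a stylesheet. If you wanted the same report outside a browser, say from a build script, the standard `javax.xml.transform` API can run the transformation. The sketch below is only an illustration under assumptions: `StylesheetRunner`, the trimmed-down sample configuration, and the tiny plain-text stylesheet are all hypothetical stand-ins, not the project's real files.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

final class StylesheetRunner {
    // Hypothetical, trimmed-down stand-in for a Log4J XML configuration.
    static final String SAMPLE_CONFIG =
        "<log4j:configuration xmlns:log4j='http://jakarta.apache.org/log4j/'>"
      + "<category name='com.example.web'><appender-ref ref='CONSOLE'/></category>"
      + "<category name='com.example.db'><appender-ref ref='FILE'/></category>"
      + "</log4j:configuration>";

    // Hypothetical plain-text stylesheet: one "logger -> appender" line per category.
    static final String SAMPLE_XSLT =
        "<xsl:stylesheet version='1.0'"
      + " xmlns:xsl='http://www.w3.org/1999/XSL/Transform'"
      + " xmlns:log4j='http://jakarta.apache.org/log4j/'>"
      + "<xsl:output method='text'/>"
      + "<xsl:template match='/'>"
      + "<xsl:for-each select='log4j:configuration/category'>"
      + "<xsl:sort select='@name'/>"
      + "<xsl:value-of select='@name'/>"
      + "<xsl:text> -&gt; </xsl:text>"
      + "<xsl:value-of select='appender-ref/@ref'/>"
      + "<xsl:text>&#10;</xsl:text>"
      + "</xsl:for-each>"
      + "</xsl:template>"
      + "</xsl:stylesheet>";

    // Applies the stylesheet text to the XML text and returns the result as a string.
    static String transform(String xml, String xslt) {
        try {
            Transformer transformer = TransformerFactory.newInstance()
                    .newTransformer(new StreamSource(new StringReader(xslt)));
            StringWriter out = new StringWriter();
            transformer.transform(new StreamSource(new StringReader(xml)),
                                  new StreamResult(out));
            return out.toString();
        } catch (TransformerException e) {
            // Wrapped so callers don't have to deal with a checked exception.
            throw new IllegalStateException("Transformation failed", e);
        }
    }
}

class StylesheetDemo {
    public static void main(String[] args) {
        System.out.print(StylesheetRunner.transform(
                StylesheetRunner.SAMPLE_CONFIG, StylesheetRunner.SAMPLE_XSLT));
    }
}
```

To run it against real files, swap the `StringReader` sources for `new StreamSource(new File(...))`; the rest of the call sequence stays the same.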

Wednesday, August 10, 2005

Technology Snake Oil, Part 3: MDA

The only real success stories with MDA are for very limited problem domains, like embedded software. Here's why: consider the cash register at McDonald's. The number of items is very limited, and the number of things you can do with them is very limited. This is well suited to a pictorial (in fact, an ideogrammatic) representation. That's because the concepts on display are restricted to morphemes, which in linguistic terms are the smallest language units that carry a semantic interpretation. Thus, it's easy to create a McDonald's cash register using just pictures and make it a quite effective solution to the food ordering problem.

For complex problems, you can't break down the problem to simple morphemes -- imagine the semantic complexities of a concept like "loan" or "payment". For a problem domain this rich, you need the richer representation of a full-blown language (which the Egyptians also discovered -- they were the last MDA success story, and they eventually abandoned it!). Trying to cram rich semantics into a pictorial representation so that you can create software that abstracts those semantics is absurd.

In fact, trying to cram the entire world into a strict tree-shaped hierarchy isn't much better (see Programming, Object-oriented). At least OOP has more flexible semantics, and we keep forcing solutions to this hierarchy problem with inventions like aspects, which cut across the tree-shaped world we've created (because stuff in the real world cuts across trees). So, where do we go from here?

Let's solve it the same way mankind (including the Egyptians) did -- create language! Language is ultimately the only way to handle the rich semantics of the world around us because that's what the people stating the problem domain use to state the problem. Which is why I'm so geeked about Language Oriented Programming -- building domain specific languages that are as close as possible to the problem we're trying to solve. LOP done right encapsulates the low-level details of a language like Java, allowing developers and others to work at a higher level of abstraction. We've been mired in curly-brace languages too long -- we need to upgrade our abstraction.

MDA is the wrong direction for abstraction upgrade. It tries to further restrict our expressiveness, believing that the entire world can be graphed and you can create real software this way. Imagine for a moment the MDA diagrams (including Object Constraint Language) for your average J2EE enterprise application. You can build it in code, with the crude semantics of curly-brace Java, in 1/3 the time you could model it to the point where you can click the "And Then a Miracle Occurs" button to generate the application. MDA might work for simple, semantically unique domains, but nowhere near the real world.

Monday, August 08, 2005

Happy with What You Have to be Happy With

Recently, I did a 3-hour tutorial workshop at the SOA conference in Singapore entitled "Agile Development and SOA", where I talked about aspects of Agile development as it applies to SOA type projects. Mostly, it was an excuse to talk about Agility in a foreign land.

- Do you ever miss deadlines? Oh, yes

- Are your users happy with what you produce? Well, no

- Are your developers frustrated by vague requirements, which leads to user dissatisfaction? Yes

- Do you ever accidentally break code in your "big bang" deployments, which takes a long time to fix? Yes

I've blogged about this phenomenon before -- why is it that developers and managers believe that all forward progress in methodology stopped in the mid-70's and all language development froze about the time Objects appeared? Is it just a non-technical person's fatigue at trying to keep up, so an arbitrary milestone was created beyond which "I won't think about it anymore?" Are developers so overwhelmed that they just shut down too, and keep working on what's in front of them even though better things abound?

Friday, August 05, 2005

Technology Snake Oil, Part 2: RAD

Then a funny thing happened to the developers on the way to this astounding productivity. They learned the tools and dutifully dragged and dropped their way to building applications...and it was good. Until, that is, they figured out that building the application is the short, easy part -- now they had to maintain the monstrosity they had created. RAD development doesn't scale well, and when you build large and very large applications with it, maintaining those applications is a nightmare of searching for crumbs of code scattered hither and yon. Working for a while in this environment is what made the Design Patterns book really hit home -- the kind of code we were creating was entirely anti-pattern, and more about "how fast can it be created?" The maintenance nightmares led me, as CTO of a consulting and development company, to figure out new ways to use the RAD tools we had to use (because of our clients) that did follow good design principles. What we found is that the tools fight you when you try to do the right thing. RAD development tools have a path of least resistance, which makes things very easy while you stay on the path (and use their wizards and other support structures). Stray from the path, and you find obstacles at every turn. But it's worth fighting through them to get to a place where you can create maintainable code -- and you find that you are no longer doing Rapid Application Development, just Application Development.

RAD is now rightfully deprecated in most serious software development efforts. Even serious developers using RADish tools like Visual Studio are avoiding the RAD pitfalls. Some developers still fight with RAD tools, and think that maintenance is just always extremely difficult in big projects. I'm still shocked by the developers who have still never heard of Design Patterns and who still work on Sisyphean development chores.

Which is why I think the RAD aspect of Ruby on Rails misses the point of what makes Rails interesting. RAD doesn't work for large, complex projects, no matter what technology. The interesting part of Ruby on Rails is the effective use of Ruby to build a domain specific language on top of the underlying Ruby language that makes it easier to do web applications with persistence. It is more advanced than the RAD tools of old because you can drop to the underlying Ruby language and get underneath the framework when you must -- and that's a powerful idea. But, building complex applications in it still requires real thought. Maybe the breathless amazement of the Rails community reflects the absence of effective RAD tools in this space before. Don't get me wrong -- I think that Rails is cool, just not for the reasons touted by so many Rails-flavored Kool-aid carriers.

Wednesday, August 03, 2005

Technology Snake Oil, Part 1: UML

Some of us craggy old-timers remember the time before UML, when we had Booch, Rumbaugh, and several other competing diagramming notations. The Holy Grail was going to be the Unified Modeling Language, which would solve all our problems and finally give us software developers a universal language, for everything from use cases through structure to deployment. And that's what we got. A classic example of "be careful what you ask for". UML feels like a compromise between a group of people, and it's so general that it's almost useless. The standards bodies keep bolting on new stuff to try to get it to the point of basic usefulness, but it just makes it worse. Does anyone really use Object Constraint Language to decorate their diagrams so that they can generate code that Just Works? I have never seen a case where you couldn't get it done faster by writing the code, then reverse engineering it into one of the expensive tools. Of course, it's good to design things before you code them, but a white board is much better. Combine the nature of UML with the fact that you can't ever show its diagrams to business analysts or end users because it is too obtuse, and I end up doing most of my architecture and design work in Visio (and I'm no fan of it either). It creates diagrams you can show everyone that capture the essence of what you are doing just before you implement it.

I'm not just a tourist in the UML and RUP world -- the company I worked for in the dim and distant past used RUP, and we foisted Heavy Duty Object-oriented Analysis and Design on clients, and used it ourselves. Why do you think I'm so militantly agile now? It just flat doesn't work for 90% of the types of projects that real developers write. And its Bastard Child, Model Driven Architecture, is featured in an upcoming Snake Oil entry...

Tuesday, August 02, 2005

SOA (Service Oriented Aberration)

Of course, the opposite is also true (and, alas, more likely) -- companies with poorly defined business processes will try to adopt SOA and fail miserably, creating a case study of SOA failure, when the technology had nothing to do with it. Worse yet, vendors of expensive development tools like Enterprise Service Buses will sell their tools to unsuspecting clients as a way to "fix" their business process. Putting a dress on a pig doesn't make the pig any prettier, and it just annoys the pig. SOA, like so many other similar technological breakthroughs (see Programming, Object-oriented and Development, Component-based), won't solve the dysfunction of dysfunctional businesses.

Thursday, July 28, 2005

Conspicuously Caucasian

I don't mind being different (I certainly was in high school, and I was from the neighborhood). However, in Singapore, I'm a target for all sorts of commerce attempts. I can't walk past a store front, even in the tony parts of town, without someone trying to sell me something. I'm not only conspicuously Caucasian, I'm American, and therefore both gullible and rich! I can't get the locals' price around here for anything. Just another example of how travel broadens you, and of why I like travel so much: it provides perspective.

Friday, July 22, 2005

Battle of the Desktop Search Giants

Lo and behold, Yahoo has entered the fray with their own desktop search tool, based on X1. In fact, it is a free version of X1 that doesn't include the enterprise features. It has a slick Windows-based UI and does a lot of cool tricks. So, I displaced Google and installed Yahoo Desktop Search (PC Magazine also gave it their Editors' Choice).

Well, after using it for a while, I'm un-installing it and going back to Google. Both are in beta, but Google is much more stable IMO. Yahoo was constantly doing odd things, and seemed to be belly up when I needed it the most. While Google has a sparser UI and doesn't handle as many file types, it is (at least for now, for me) more stable. And, for infrastructure software like desktop search, stable wins the day for me. I'm writing this while un-installing Yahoo and re-installing Google. Maybe once Yahoo turns 1.0, I'll re-evaluate. But for now, I'm switching back to Google.

Thursday, July 21, 2005

Passion Part 2

Life is too short to spend time on activities where our passion is not engaged. I know a lot of people who fundamentally don't enjoy their work. While earning a living is a laudable goal, I think those of us who do really enjoy our work are lucky. Most of my friends who don't enjoy their work envy the passion with which I attack my work. Some of them accuse me of not really working, just enjoying my hobby: that's not true -- it's still work. For my hobby, I would create an entirely different set of software. It still tires me out at the end of the day. But, for the most part, I take joy in the job that I do. I'm lucky.

Tuesday, July 19, 2005

Enjoying the Passion

Both events, last week and in Columbus, are vivid reminders of why I do this for a living and why I work where I do. How many people do you know who are really passionate about what they do for work, and who so actively proselytize it to everyone they meet who can understand it? And who in fact actively seek out people who can understand it so that they can talk about it some more? Even the non-techies at ThoughtWorks enjoy it, albeit vicariously.

Thursday, July 14, 2005

Running Tests to Watch Them Fail

Which is why I think that NUnit v2 got it righter (is that a word?) by using annotations to mark the test methods instead of naming patterns. If you misspell the annotation, the compiler complains, which, in this case, is A Good Thing. Improvements are already afoot in the Java world -- TestNG already supports annotations (and JavaDoc tags if you are using 1.4) and several other features, and rumor has it that JUnit 4 will support annotations and some of the other TestNG features as well.
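The difference is easy to demonstrate with a toy discovery routine. Everything below is hypothetical: `@Check` is a home-made marker annotation standing in for whatever a real framework provides, and `Discovery` is not how JUnit, TestNG, or NUnit are actually implemented. It just contrasts the two ways of finding test methods.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical marker annotation; misspelling it (say, @Chekc) is a compile error.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Check {}

class CalculatorChecks {
    @Check
    public void testAddition() {
        if (1 + 1 != 2) throw new AssertionError("addition is broken");
    }

    // The name is misspelled on purpose: a "test*" naming pattern will not see it.
    @Check
    public void tsetSubtraction() {
        if (3 - 1 != 2) throw new AssertionError("subtraction is broken");
    }
}

final class Discovery {
    // Naming-pattern discovery: every method whose name starts with "test".
    static List<String> byNamingPattern(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getDeclaredMethods()) {
            if (m.getName().startsWith("test")) names.add(m.getName());
        }
        Collections.sort(names);
        return names;
    }

    // Annotation discovery: every method carrying @Check, whatever its name.
    static List<String> byAnnotation(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getDeclaredMethods()) {
            if (m.isAnnotationPresent(Check.class)) names.add(m.getName());
        }
        Collections.sort(names);
        return names;
    }
}

class DiscoveryDemo {
    public static void main(String[] args) {
        System.out.println("naming pattern: " + Discovery.byNamingPattern(CalculatorChecks.class));
        System.out.println("annotation:     " + Discovery.byAnnotation(CalculatorChecks.class));
    }
}
```

The naming-pattern pass silently skips `tsetSubtraction`, so that test would never run and never fail. The annotation pass finds both methods.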

Tuesday, July 12, 2005

Beyond OOP

One of my favorite characteristics of college was the accidental synergy that occurs between classes that you happen to be taking in the same quarter. For example, I took the automata class the same quarter as the compilers class, and there are obvious common elements. Even something as seemingly unrelated as artificial intelligence and philosophy creates a fertile ground for interactions.

So, how are these two things related? Lately, during my constant ruminations about What Comes Next, I've seen an interesting synergy between two separate but related concepts. Language oriented programming, my new favorite geek obsession, is one. It addresses the disconnect between the hierarchical nature of OOP and the rich semantics of language. The other day, I was talking to Obie Fernandez, a fellow ThoughtWorker, and he is really into RDF and the Semantic Web. He's dealing in hierarchies, but in a different way. I am probably greatly injuring this assertion by my newness to this subject, but the Semantic Web folks maintain that, among other things, the problem with OOP is that it is basically context free. Hierarchies exist, but they don't relate to other hierarchies in the rest of the world -- each inheritance tree is an island. And properties that exist on classes don't have any context outside the class. In the real world, "address" can have separate meanings based on the context -- are we talking about a physical place or an inaugural speech? The way our minds work isn't like OOP, where everything exists in a strict hierarchy. We have these "free floating" concepts like "address" that can be attached to the correct context. What's needed is context...and that's what the semantics of language provide that OOP doesn't already have. Now, this week at least, I'm thinking that What Comes Next is related to both language oriented programming, which provides needed context to the structure of programming, and semantic ontologies, which provide global context. These are fundamentally different things, but it seems like there is some synergy to be found. More later...

Friday, July 08, 2005

Metaphor Shear

Anyone who uses a word processor for very long inevitably has the experience of putting hours of work into a long document and then losing it because the computer crashes or the power goes out. Until the moment that it disappears from the screen, the document seems every bit as solid and real as if it had been typed out in ink on paper. But in the next moment, without warning, it is completely and irretrievably gone, as if it had never existed. The user is left with a feeling of disorientation (to say nothing of annoyance) stemming from a kind of metaphor shear--you realize that you've been living and thinking inside of a metaphor that is essentially bogus.

The whole essay is a great read, with lots of insights into fundamental ideas about operating systems (if you think this metaphor is good, wait until you read his description of Windows vs. Mac OS vs. Linux as car dealerships). I think about metaphor shear anytime I'm forced down to the guts of computers or operating systems.

Sunday, July 03, 2005

Language Workbench Sample Ported to Java

- I used generics in a few places to clean up type casting

- The reflection stuff is different because that's where .NET and Java differ the most

- I cheated and created simple classes with public fields rather than properties for the target classes to make the reflection code easier
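For the curious, the last bullet boils down to something like the following sketch. The `ServiceCall` target class, its field names, and the `FieldPopulator` helper are illustrative assumptions, not the code from the actual port. The point is only that with public fields, `Class.getField` plus `Field.set` is all the reflection you need, and a generic method avoids casting the result.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical target class: public fields instead of getter/setter properties.
class ServiceCall {
    public String customerName;
    public String customerId;
}

final class FieldPopulator {
    // Copies each map entry into the public field of the same name and
    // returns the target, typed via generics so the caller needs no cast.
    static <T> T populate(T target, Map<String, String> values) {
        for (Map.Entry<String, String> entry : values.entrySet()) {
            try {
                target.getClass().getField(entry.getKey()).set(target, entry.getValue());
            } catch (ReflectiveOperationException e) {
                // Covers both a missing field and an inaccessible one.
                throw new IllegalArgumentException("Cannot set field " + entry.getKey(), e);
            }
        }
        return target;
    }
}

class PopulateDemo {
    public static void main(String[] args) {
        Map<String, String> values = new LinkedHashMap<>();
        values.put("customerName", "Dave");
        values.put("customerId", "10301");
        ServiceCall call = FieldPopulator.populate(new ServiceCall(), values);
        System.out.println(call.customerName + " / " + call.customerId);
    }
}
```

With real properties you would have to derive a setter name from each key and look up a `Method` instead, which is the extra ceremony the public fields sidestep.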

Friday, July 01, 2005

Berg's Chamber Symphony

What makes this music so interesting that you can talk about it for 3 times as long as the piece itself? Well, it would take me over an hour to explain! Here are some examples, though. This piece was written in homage to Berg's teacher, Arnold Schonberg, who developed the 12-tone method of composition. The first theme of Berg's work represents Schonberg, Berg, and Anton Webern (another student) with the motif Arnold Schonberg Anton Webern Alban Berg (using the German alphabet). So, the main theme of the work uses the names of the teacher and students. Another example: the second movement is a palindrome: it plays up to the middle (signified by the piano's only involvement in the 2nd movement), then plays in reverse to the end, using different combinations of instruments so that it's not immediately obvious. There are lots of mathematical features in this music: the first two movements have 240 bars each, and the third has 480 bars.

Needless to say, this is a fascinating piece of music, made all the richer if you understand the context of the time in which it was written and all the ideas that went into it. Many people who don't like modern serious music don't have the correct expectations or context. The movie Memento is very different from Casablanca, yet both are great achievements. The same is true for serious music.

Thursday, June 30, 2005

Improving Your AIM

David has since blogged about AIM (nice acronym, very managerial) here. He's soliciting people to help him develop a more or less objective measurement of just how agile a team really is. To quote: "the point is I WANT A WAY to compare the capabilities of teams implementing these [agile] processes." If this topic interests you, wander over to his blog and put in your own $0.02 on what categories should be measured.

Wednesday, June 29, 2005

Riffin' at No Fluff, Just Stuff

The same thing happened last weekend at No Fluff, Just Stuff in Orlando. One of the speakers became ill at the last minute, and the organizer had to scramble to find speakers to fill his spots. I filled one of the talks, about fallacies in enterprise architecture, after never having seen the talk and having a whole 10 minutes to look at the slides for the first time. However, the talk came off really well -- I managed to talk for the entire ninety minutes, and got good scores. Because the problem domain was familiar, I was able to extemporize the talk, bringing my own experience to bear. This talk wasn't about tools or APIs, but about common fallacies developers face in enterprise situations. Well, I've seen a lot of that. Of course, I'm sure my rendering of the talk is nowhere near as good as the original and that the stories and experiences I bring are completely different from the original speaker's. However, it reminded me of a jazz trumpet player showing up to a gig and saying "'Girl from Ipanema', extra solo after the chorus, and watch me for the finish. A-one, and-a Two, and...".

Tuesday, June 28, 2005

The Next Revolution: Language-oriented Programming and Language Workbenches

Thursday, June 23, 2005

Centralized Help in Eclipse

Friday, June 17, 2005

Windows eats itself?

So even Windows doesn't trust Windows Explorer anymore. Hmmmmm...

Tuesday, June 07, 2005

What You Know You Know, What You Don't Know, and What You Don't Know That You Don't Know

This illustrates, to me, one of the best reasons to have a public forum like a blog. You inadvertently learn all sorts of stuff if you are brave enough to try to teach others what you already know -- including stuff that you didn't know that you didn't know.

Monday, June 06, 2005

Reason # 431 Why You Should Use Cygwin/Bash Instead of the Windows Command Prompt

if ant; then ant runatest -Dtesttorun=com.thoughtworks.logging.AllTests; fi

This runs the default ant task (which builds everything). If the return code is 0, it then runs the "runatest" target. The test runs only if the build was successful. This little trick takes advantage of the fact that ant is smart enough to tell the underlying OS (regardless of the shell) if it passed or failed. The "fi" at the end is the "end if" indicator for bash.
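The same exit-status chaining can be tried without ant installed. What follows is only a minimal sketch: fake_build and fake_tests are made-up stand-ins (not real ant targets) so the behavior can be seen in isolation. It also shows the shorter "&&" form, which short-circuits on the exit code in the same way.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for "ant": exits with the status passed as $1 (default 0).
fake_build() { return "${1:-0}"; }

# Hypothetical stand-in for "ant runatest ...".
fake_tests() { echo "tests ran"; }

# Long form, same shape as the one-liner above:
# the tests run only when the build exits with 0.
build_then_test() {
  if fake_build "$1"; then
    fake_tests
  fi
}

# Short form: "&&" runs the right-hand command only if the left one exits with 0.
build_and_test() {
  fake_build "$1" && fake_tests
}
```

Calling build_then_test 0 or build_and_test 0 prints "tests ran"; passing 1 prints nothing. One difference worth knowing: when the build fails, the "if" form itself still returns 0 (no branch ran), while the "&&" form passes the failing exit code along, which matters if another script is checking the result.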

Yes, you can create the same kind of little batchy kind of thing in Windows, but I never think to. It seems somehow more natural to me to think in terms of automation, shell scripts, and command chaining in bash than it does in Windows. Maybe it's because bash is far, far more powerful than the command line tools in Windows. I never run anything in a Windows command shell now unless I'm forced.

Thursday, June 02, 2005

Notes from Singapore, Part 1

When talking to the natives in Singapore, a friend (Terry) and I heard about durian ("stinky fruit"), which is a local fruit. I always ask about local foods from the natives to see what I can try that I haven't had before. It is against the law in Singapore to take a durian on public transport. And it is forbidden to take the fruit inside most buildings. However, several locals at the table said they really liked it. My new mission: try some durian. We ate one night at Newton food court, which had fresh durian, so we got one. Tom, who lives in Bali, is a big fan, so he would eat it if we didn't care for it. Well, they cut it open and Tom insisted that we eat it essentially holding our noses -- the flavor and the smell are only loosely related. So I tried it. Frank said it best -- it's like a mixture of vanilla pudding and onion, with a kind of fruit-flesh/fishy texture. I tried it. It was durian-like. OK, I've tried it. The problem is that, even though I only had a bite, I kept trying it -- the taste would not go away. I ate some other stuff. Still there. Drink water, beer, whatever -- durian. After a while you can sort of get rid of the taste until you have the misfortune of burping. Durian. Stronger than ever. It keeps growing. It was well into the next day until I could taste something else. It took Terry even longer. In fact, he developed a semi-permanent association between Tiger beer and durian taste. He may never appreciate Tiger beer again. The other interesting aspect of this fruit: it smells. And it gets stronger and stronger. It was still at our table because Tom (for whom I have new respect mingled with pity) was gradually eating the leftover durian (all the durian except for one bite each from the other victims). Ingo (one of the speakers) kept asking Tom to move it further away, because the smell, while not exactly the same as the taste, is the olfactory equivalent of the taste. After you have smelled it, you smell it everywhere.
For the rest of the trip, we could tell anytime we got close to durian. Terry and I would look at each other at the same time: durian. Every open air market you come to sells that stinky stuff. And you always notice it if it's near. You have been warned.